Installing a Kubernetes 1.22.1 Cluster with kubeadm

IP Plan

| Node | IP | OS version | Software versions |
|---|---|---|---|
| VIP | 10.10.200.112 | | |
| k8s-master | 10.10.200.106 | CentOS 7.9.2009 | Docker-ce: 20.10.8, Keepalived: 1.3.5, Etcd: 3.5.0, kubectl: v1.22.1, kubeadm: v1.22.1 |
| k8s-master1 | 10.10.200.107 | CentOS 7.9.2009 | Docker-ce: 20.10.8, Keepalived: 1.3.5, Etcd: 3.5.0, kubectl: v1.22.1, kubeadm: v1.22.1 |
| k8s-master2 | 10.10.200.108 | CentOS 7.9.2009 | Docker-ce: 20.10.8, Keepalived: 1.3.5, Etcd: 3.5.0, kubectl: v1.22.1, kubeadm: v1.22.1 |
| k8s-node1 | 10.10.200.109 | CentOS 7.9.2009 | kubectl: v1.22.1, kubeadm: v1.22.1 |
| k8s-node2 | 10.10.200.110 | CentOS 7.9.2009 | kubectl: v1.22.1, kubeadm: v1.22.1 |
| k8s-node3 | 10.10.200.111 | CentOS 7.9.2009 | kubectl: v1.22.1, kubeadm: v1.22.1 |

Base environment preparation

Kernel upgrade

$ uname -a

Linux k8s-master 3.10.0-1160.25.1.el7.x86_64 #1 SMP Wed Apr 28 21:49:45 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

$ rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# After installation, check that the menuentry for the new kernel in /boot/grub2/grub.cfg contains an initrd16 line; if it does not, install the kernel again.

$ yum --enablerepo=elrepo-kernel install -y kernel-lt

# Boot from the new kernel by default

$ grub2-set-default 0

Set the hostname

$ hostnamectl set-hostname k8s-master

Make the hostnames resolve to each other through /etc/hosts

10.10.200.106 k8s-master

10.10.200.107 k8s-master1

10.10.200.108 k8s-master2

10.10.200.109 k8s-node1

10.10.200.110 k8s-node2

10.10.200.111 k8s-node3
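The same six entries have to end up in /etc/hosts on every node. A minimal sketch of one way to do that without creating duplicates: it prints only the entries that a given hosts file does not contain yet. The variable `CLUSTER_HOSTS` and the function `print_hosts_entries` are illustrative names, not part of the original setup.

```shell
# Hypothetical helper: the "IP NAME" pairs from the list above.
CLUSTER_HOSTS="10.10.200.106 k8s-master
10.10.200.107 k8s-master1
10.10.200.108 k8s-master2
10.10.200.109 k8s-node1
10.10.200.110 k8s-node2
10.10.200.111 k8s-node3"

# Print the entries that are missing from the given hosts file
# (defaults to /etc/hosts). Nothing is written by this function.
print_hosts_entries() {
  hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    # The trailing group keeps "k8s-master" from matching "k8s-master1".
    if ! grep -qE "^${ip}[[:space:]]+${name}([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name"
    fi
  done
}

# Usage (as root, on each node):
#   print_hosts_entries >> /etc/hosts
```

Because already-present entries are skipped, running the usage line a second time appends nothing.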

Make sure the MAC address is unique on every node

$ ip link

Make sure product_uuid is unique on every node

# root @ k8s-master2 in ~ [17:01:50]

$ cat /sys/class/dmi/id/product_uuid

2CBC2442-B52B-7DBA-D5BA-09A5CD5D24B4

- Make sure the iptables tooling does not use the nftables backend (the newer firewall framework)

- Disable SELinux

Enable IPv4 forwarding

$ echo '1' > /proc/sys/net/ipv4/ip_forward

Enable IPVS

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

yum install -y nfs-utils ipset ipvsadm
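The module list above was written for the stock 3.10 kernel. After booting into the kernel-lt kernel installed earlier, `modprobe nf_conntrack_ipv4` is expected to fail, on the assumption that nf_conntrack_ipv4 was merged into nf_conntrack in Linux 4.19. A small sketch that picks the module name from the kernel release string; `conntrack_module_for_kernel` is a hypothetical helper name.

```shell
# Hypothetical helper: choose the conntrack module for a kernel release.
# Assumption: kernels >= 4.19 ship nf_conntrack instead of nf_conntrack_ipv4.
conntrack_module_for_kernel() {
  release="${1:-$(uname -r)}"   # e.g. 3.10.0-1160.25.1.el7.x86_64
  major="${release%%.*}"        # text before the first dot
  rest="${release#*.}"
  minor="${rest%%.*}"           # text between the first and second dot
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Usage (as root):
#   modprobe -- "$(conntrack_module_for_kernel)"
```

The same function call could replace the hard-coded module name in /etc/sysconfig/modules/ipvs.modules and in the `lsmod | grep` check.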

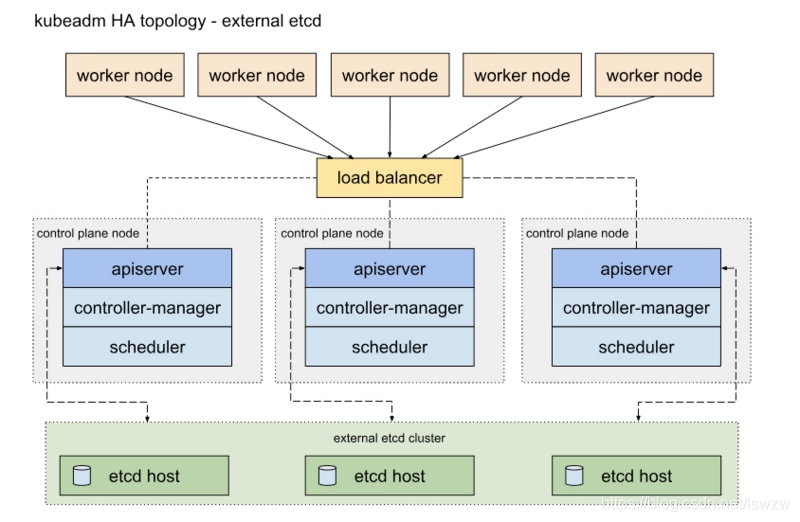

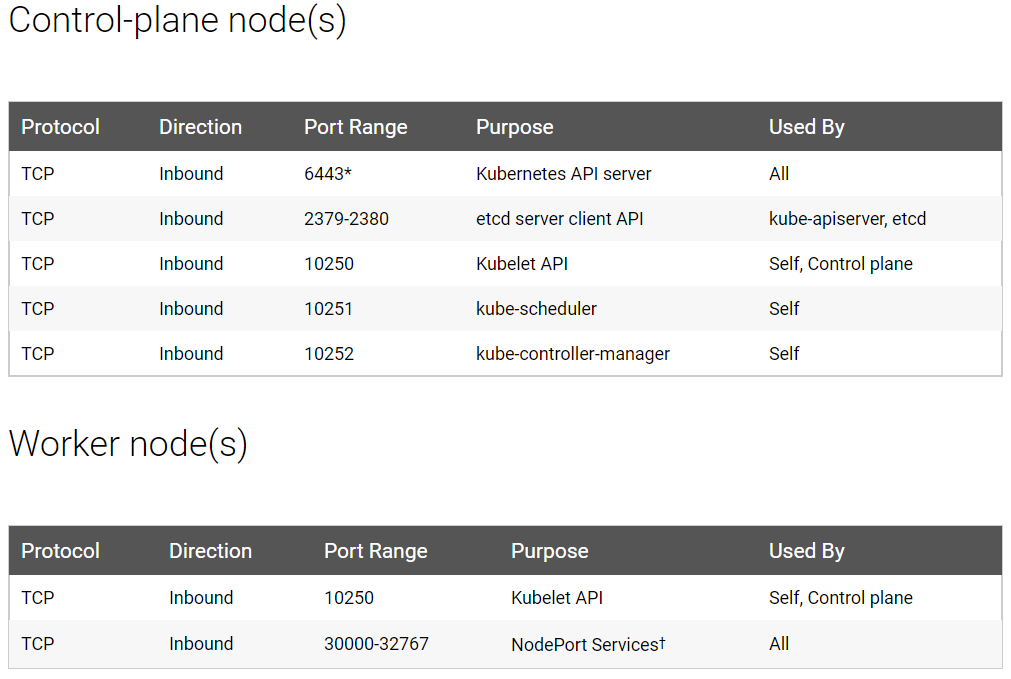

Open the required ports in the firewall (or disable the firewall)

$ firewall-cmd --list-all

public (active)

target: default

icmp-block-inversion: no

interfaces: ens33

sources:

services: dhcpv6-client ssh

ports: 6443/tcp 2379-2380/tcp 10250-10252/tcp 10250/tcp 30000-32767/tcp

protocols:

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:
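The output above shows the end state but not how the ports were opened. A hedged sketch that only prints the `firewall-cmd` invocations for the port list taken from that output, so they can be reviewed before anything is changed; `K8S_PORTS` and `print_firewall_commands` are illustrative names.

```shell
# Ports copied from the "ports:" line of the firewall-cmd output above
# (10250/tcp is already covered by the 10250-10252/tcp range).
K8S_PORTS="6443/tcp 2379-2380/tcp 10250-10252/tcp 30000-32767/tcp"

# Print, but do not run, one firewall-cmd call per port plus a reload.
print_firewall_commands() {
  for port in $K8S_PORTS; do
    echo "firewall-cmd --permanent --add-port=${port}"
  done
  echo "firewall-cmd --reload"
}

# Usage (as root, after reviewing the output):
#   print_firewall_commands | sh
```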

Disable SELinux

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

Disable swap

$ sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

$ swapoff -a
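The `sed -i` command above edits /etc/fstab in place, and it would prefix a second `#` if it were run again. As a sketch of a more cautious variant, the function below prints the modified file instead of writing it and leaves lines that are already comments untouched; `comment_out_swap` is a hypothetical name.

```shell
# Hypothetical helper: print an fstab with active swap entries commented out.
# Lines that already start with '#' are skipped, so re-running is harmless.
comment_out_swap() {
  sed -e '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$1"
}

# Usage (as root): review the result before replacing the real file.
#   comment_out_swap /etc/fstab > /tmp/fstab.new
#   diff /etc/fstab /tmp/fstab.new
```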

Set the kernel parameters (written to /etc/sysctl.d/k8s.conf)

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

sysctl -p /etc/sysctl.d/k8s.conf
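Since the same file has to exist on all six nodes, a quick check that it really contains the three networking settings can save debugging later. This is a sketch only; `check_k8s_sysctl_file` is a hypothetical helper that inspects the file contents, not the live kernel values.

```shell
# Hypothetical check: confirm a sysctl.d-style file sets every required
# key to 1. Returns 0 when all keys are present, 1 otherwise.
check_k8s_sysctl_file() {
  conf_file="$1"
  status=0
  for want in \
    "net.bridge.bridge-nf-call-ip6tables=1" \
    "net.bridge.bridge-nf-call-iptables=1" \
    "net.ipv4.ip_forward=1"; do
    # Strip spaces and tabs so "key = 1" and "key=1" compare equal.
    if ! tr -d ' \t' < "$conf_file" | grep -qxF "$want"; then
      echo "missing or different: $want"
      status=1
    fi
  done
  return "$status"
}

# Usage:
#   check_k8s_sysctl_file /etc/sysctl.d/k8s.conf && echo "sysctl file OK"
```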

# Set up the yum repository

yum install -y yum-utils \

device-mapper-persistent-data \

lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

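# Install and start Docker (version 20.10.8, as listed in the IP plan)

yum install -y docker-ce-20.10.8 docker-ce-cli-20.10.8 containerd.io

systemctl enable docker && systemctl start docker

# Add IPVS support (the ip_vs kernel modules are loaded in the IPVS step above)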

etcd cluster

Download and distribute the binaries

$ wget https://github.com/coreos/etcd/releases/download/v3.4.3/etcd-v3.4.3-linux-amd64.tar.gz

$ tar -xvf etcd-v3.4.3-linux-amd64.tar.gz

export MASTER_IPS=(10.10.200.106 10.10.200.107 10.10.200.108)

export NODE_IPS=(10.10.200.109 10.10.200.110 10.10.200.111)

export ETCD_NODES="k8s-master=https://10.10.200.106:2380,k8s-master1=https://10.10.200.107:2380,k8s-master2=https://10.10.200.108:2380"

export MASTER_NAMES=(k8s-master k8s-master1 k8s-master2)

export ETCD_DATA_DIR="/data/k8s/etcd/data"

export ETCD_WAL_DIR="/data/k8s/etcd/wal"

for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-v3.4.3-linux-amd64/etcd* root@${node_ip}:/opt/k8s/bin

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done
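The `ETCD_NODES` value must pair each member name with its own peer URL, and each name has to match the `--name` that member is started with. Writing the string by hand is error-prone, so here is a sketch that derives it from the name and IP lists; `build_etcd_initial_cluster` is a hypothetical helper, and it takes space-separated strings so that it also works in plain `sh`.

```shell
# Hypothetical helper: build the --initial-cluster value from two
# space-separated lists (member names and IPs, in the same order).
build_etcd_initial_cluster() {
  names="$1"
  ips="$2"
  result=""
  set -- $ips                      # walk the IPs as positional parameters
  for name in $names; do
    [ "$#" -gt 0 ] || { echo "more names than IPs" >&2; return 1; }
    result="${result:+${result},}${name}=https://${1}:2380"
    shift
  done
  echo "$result"
}

# Usage with the bash arrays defined above:
#   export ETCD_NODES="$(build_etcd_initial_cluster "${MASTER_NAMES[*]}" "${MASTER_IPS[*]}")"
```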

Issue the certificate

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.10.200.106",

"10.10.200.107",

"10.10.200.108"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "moresec"

}

]

}

EOF

cfssl gencert -ca=/opt/k8s/work/ca.pem \

-ca-key=/opt/k8s/work/ca-key.pem \

-config=/opt/k8s/work/ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

ls etcd*pem

Distribute the certificates

for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/etcd/cert"

scp etcd*.pem root@${node_ip}:/etc/etcd/cert/

done

Create and distribute the systemd unit file

cd /opt/k8s/work

source /opt/k8s/bin/environment.sh

cat > etcd.service.template <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=${ETCD_DATA_DIR}

ExecStart=/opt/k8s/bin/etcd \\

--data-dir=${ETCD_DATA_DIR} \\

--wal-dir=${ETCD_WAL_DIR} \\

--name=##NODE_NAME## \\

--cert-file=/etc/etcd/cert/etcd.pem \\

--key-file=/etc/etcd/cert/etcd-key.pem \\

--trusted-ca-file=/etc/kubernetes/cert/ca.pem \\

--peer-cert-file=/etc/etcd/cert/etcd.pem \\

--peer-key-file=/etc/etcd/cert/etcd-key.pem \\

--peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--listen-peer-urls=https://##NODE_IP##:2380 \\

--initial-advertise-peer-urls=https://##NODE_IP##:2380 \\

--listen-client-urls=https://##NODE_IP##:2379,http://127.0.0.1:2379 \\

--advertise-client-urls=https://##NODE_IP##:2379 \\

--initial-cluster-token=etcd-cluster-0 \\

--initial-cluster=${ETCD_NODES} \\

--initial-cluster-state=new \\

--auto-compaction-mode=periodic \\

--auto-compaction-retention=1 \\

--max-request-bytes=33554432 \\

--quota-backend-bytes=6442450944 \\

--heartbeat-interval=250 \\

--election-timeout=2000

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Render the per-node unit files from the template (etcd runs on the master nodes)

cd /opt/k8s/work

source /opt/k8s/bin/environment.sh

for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${MASTER_NAMES[i]}/" -e "s/##NODE_IP##/${MASTER_IPS[i]}/" etcd.service.template > etcd-${MASTER_IPS[i]}.service

done

# Distribute the unit files

for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service

done

# Start the service

for node_ip in ${MASTER_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p ${ETCD_DATA_DIR} ${ETCD_WAL_DIR}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd " &

done
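The template step silently produces a broken unit file when a name or IP variable is empty, because `sed` then substitutes an empty string. A sketch of a stricter rendering function that refuses empty values and any leftover `##...##` marker; `render_etcd_unit` is a hypothetical name and is not part of the original scripts.

```shell
# Hypothetical helper: render one unit file to stdout and fail loudly
# instead of emitting a file with an empty name/IP or a leftover marker.
render_etcd_unit() {
  template="$1"
  node_name="$2"
  node_ip="$3"
  if [ -z "$node_name" ] || [ -z "$node_ip" ]; then
    echo "node name and node IP must both be non-empty" >&2
    return 1
  fi
  rendered="$(sed -e "s/##NODE_NAME##/${node_name}/g" \
                  -e "s/##NODE_IP##/${node_ip}/g" "$template")" || return 1
  if printf '%s\n' "$rendered" | grep -q '##[A-Z_]*##'; then
    echo "unreplaced placeholder in ${template}" >&2
    return 1
  fi
  printf '%s\n' "$rendered"
}

# Usage:
#   render_etcd_unit etcd.service.template k8s-master1 10.10.200.107 > etcd-10.10.200.107.service
```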

Check the status

# root @ k8s-master in ~ [11:00:45]

$ etcdctl \

-w table --cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem \

--endpoints=${etcd} endpoint status

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://10.10.200.106:2379 | acc56010801fe0e6 | 3.5.0 | 26 MB | true | false | 6 | 8620013 | 8620013 | |

| https://10.10.200.107:2379 | be0a6ca6c1806a66 | 3.5.0 | 26 MB | false | false | 6 | 8620013 | 8620013 | |

| https://10.10.200.108:2379 | ef83c3c8d263d353 | 3.5.0 | 26 MB | false | false | 6 | 8620013 | 8620013 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

Build kubeadm from source to change the certificate validity period

$ go version

go version go1.17 linux/amd64

$ git clone https://github.com/kubernetes/kubernetes.git

# vim ./staging/src/k8s.io/client-go/util/cert/cert.go

NotAfter: now.Add(duration365d * 10).UTC(), // the default is 10 years; change the multiplier to 100 for 100 years (it must not exceed 1000 years)

# vim ./cmd/kubeadm/app/constants/constants.go

CertificateValidity = time.Hour * 24 * 365 * 100 // change it to this value

Cluster initialization

- Default configuration

kubeadm config print init-defaults > kubeadm-init.yaml

Configuration file after editing

cat > kubeadm-config.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.10.200.106 # IP of this host
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master # hostname of this host
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:
    endpoints:
    - https://10.10.200.106:2379
    - https://10.10.200.107:2379
    - https://10.10.200.108:2379
    caFile: /etc/kubernetes/cert/ca.pem # CA certificate generated when the etcd cluster was built
    certFile: /etc/etcd/cert/etcd.pem # client certificate generated when the etcd cluster was built
    keyFile: /etc/etcd/cert/etcd-key.pem # client key generated when the etcd cluster was built
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.22.1
controlPlaneEndpoint: 10.10.200.112:6443 # control-plane endpoint (the VIP when keepalived provides HA, or 127.0.0.1 when a local nginx proxy is used)
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
EOF

Bring up the first master node

# root @ k8s-master in /opt/k8s [22:14:26]

$ kubeadm init --config=kubeadm-config.yaml

[init] Using Kubernetes version: v1.22.1

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.10.200.106 10.10.200.112]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 10.510578 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdfe.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.10.200.112:6443 --token abcdfe.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8c702a0fd84ad6be5643f11429ba27198ebfebaa3f37a9b64a7817ea75e21440 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.200.112:6443 --token abcdfe.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8c702a0fd84ad6be5643f11429ba27198ebfebaa3f37a9b64a7817ea75e21440

- Create the kubeconfig directory as instructed in the output

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

- Fix for the CoreDNS image pull error

docker pull coredns/coredns:1.8.4

docker tag coredns/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4

Joining the remaining nodes

Joining the master nodes

keepalived

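! Configuration File for keepalived
vrrp_instance VI_1 {
    state MASTER                  # set to BACKUP on the backup nodes
    interface eth0                # primary network interface
    virtual_router_id 44          # virtual router ID; must be identical on master and backups
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111            # authentication password; must be identical on master and backups
    }
    virtual_ipaddress {           # virtual IP (VIP)
        192.168.100.240
    }
}
virtual_server 192.168.100.240 6443 {    # externally visible virtual IP address
    delay_loop 6                  # interval between real-server health checks, in seconds
    lb_algo rr                    # load-balancing algorithm; rr is round robin
    lb_kind DR                    # LVS forwarding mode: DR (direct routing)
    protocol TCP                  # check real-server state over TCP
    real_server 192.168.100.241 6443 {   # first node
        weight 3                  # node weight
        TCP_CHECK {               # health check method
            connect_timeout 3     # connection timeout
            nb_get_retry 3        # number of retries
            delay_before_retry 3  # delay between retries, in seconds
        }
    }
    real_server 192.168.100.242 6443 {   # second node
        weight 3
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}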

Distribute the certificate files

$ ansible master -m copy -a 'src=/etc/kubernetes/pki/ dest=/etc/kubernetes/pki/ owner=root group=root mode=0644'

10.10.200.107 | CHANGED => {

"changed": true,

"dest": "/etc/kubernetes/pki/",

"src": "/etc/kubernetes/pki/"

}

10.10.200.108 | CHANGED => {

"changed": true,

"dest": "/etc/kubernetes/pki/",

"src": "/etc/kubernetes/pki/"

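Copy the bootstrap configuration file to each master node and initialize each one separately (change the IP and hostname in the file first)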

# root @ k8s-master in /opt/k8s [22:24:05]

$ scp /opt/k8s/kubeadm-config.yaml root@k8s-master1:/opt/k8s

kubeadm-config.yaml 100% 1236 789.8KB/s 00:00

# root @ k8s-master in /opt/k8s [22:24:13]

$ scp /opt/k8s/kubeadm-config.yaml root@k8s-master2:/opt/k8s

kubeadm-config.yaml 100% 1236 794.7KB/s 00:00

# root @ k8s-master1 in /opt/k8s [22:38:04]

$ kubeadm init --config=kubeadm-config.yaml

[init] Using Kubernetes version: v1.22.1

[preflight] Running pre-flight checks

# root @ k8s-master2 in /opt [22:35:14]

$ kubeadm init --config=k8s.yaml

[init] Using Kubernetes version: v1.22.1

[preflight] Running pre-flight checks

Joining the worker nodes

# root @ k8s-node3 in ~ [22:47:32]

$ kubeadm join 10.10.200.112:6443 --token abcdfe.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:8c702a0fd84ad6be5643f11429ba27198ebfebaa3f37a9b64a7817ea75e21440

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- Fix for a worker node timing out while joining (see the reference link)

swapoff -a # will turn off the swap

kubeadm reset

systemctl daemon-reload

systemctl restart kubelet

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X # will reset iptables

Check the cluster status

# root @ k8s-master in /opt/k8s [22:46:07] C:1

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 32h v1.22.1

k8s-master1 NotReady control-plane,master 11m v1.22.1

k8s-master2 NotReady control-plane,master 14m v1.22.1

k8s-node1 NotReady <none> 18s v1.22.1

k8s-node2 NotReady <none> 14s v1.22.1

k8s-node3 NotReady <none> 2m19s v1.22.1

Installing add-ons

CNI: Calico network

- Download and edit the manifest

curl https://docs.projectcalico.org/manifests/calico.yaml -O

# Set the pool CIDR to the Pod subnet

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

- name: IP_AUTODETECTION_METHOD
  value: "interface=ens*"
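The CIDR change can also be scripted instead of edited by hand. The sketch below assumes the downloaded manifest ships the pool setting commented out as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`; check the file first, because that layout is an assumption about the manifest and not something stated in these notes. `set_calico_pod_cidr` is a hypothetical name, and it prints the result rather than editing in place.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR and set its value.
# Assumes the two-line commented form described above.
set_calico_pod_cidr() {
  manifest="$1"
  cidr="$2"
  # '|' is the s-command delimiter because the CIDR contains '/'.
  sed -e '/# - name: CALICO_IPV4POOL_CIDR/{' \
      -e 's|# - name:|- name:|' \
      -e 'n' \
      -e "s|#   value: \".*\"|  value: \"${cidr}\"|" \
      -e '}' "$manifest"
}

# Usage: write to a new file and diff it before applying.
#   set_calico_pod_cidr calico.yaml 10.244.0.0/16 > calico-pod-cidr.yaml
#   diff calico.yaml calico-pod-cidr.yaml
```

Dropping the two characters `# ` keeps the list item aligned with its uncommented siblings, which is why the value line loses one more space than the name line.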

- Apply the manifest

$ kubectl apply -f calico.yaml

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrole.rbac.authorization.k8s.io/calico-node unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

- Check the cluster status and the Calico pod status

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 44h v1.22.1

k8s-master1 Ready control-plane,master 12h v1.22.1

k8s-master2 Ready control-plane,master 12h v1.22.1

k8s-node1 Ready <none> 11h v1.22.1

k8s-node2 Ready <none> 11h v1.22.1

k8s-node3 Ready <none> 11h v1.22.1

$ kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-58497c65d5-jzmwx 0/1 CrashLoopBackOff 3 (37s ago) 4m13s 10.244.235.195 k8s-master <none> <none>

calico-node-2q6q9 1/1 Running 0 4m14s 10.10.200.107 k8s-master1 <none> <none>

calico-node-65lcv 1/1 Running 0 4m14s 10.10.200.108 k8s-master2 <none> <none>

calico-node-hthv7 1/1 Running 0 4m13s 10.10.200.111 k8s-node3 <none> <none>

calico-node-n7frv 0/1 Running 0 4m14s 10.10.200.106 k8s-master <none> <none>

calico-node-vgzqh 1/1 Running 0 4m13s 10.10.200.109 k8s-node1 <none> <none>

calico-node-vwf4w 1/1 Running 0 4m13s 10.10.200.110 k8s-node2 <none> <none>

coredns-7f6cbbb7b8-25rjw 0/1 Running 0 44h 10.244.235.193 k8s-master <none> <none>

coredns-7f6cbbb7b8-wq5nn 0/1 Running 0 44h 10.244.235.194 k8s-master <none> <none>

kube-apiserver-k8s-master 1/1 Running 10 12h 10.10.200.106 k8s-master <none> <none>

kube-apiserver-k8s-master1 1/1 Running 0 12h 10.10.200.107 k8s-master1 <none> <none>

kube-apiserver-k8s-master2 1/1 Running 0 12h 10.10.200.108 k8s-master2 <none> <none>

kube-controller-manager-k8s-master 1/1 Running 2 44h 10.10.200.106 k8s-master <none> <none>

kube-controller-manager-k8s-master1 1/1 Running 0 12h 10.10.200.107 k8s-master1 <none> <none>

kube-controller-manager-k8s-master2 1/1 Running 0 12h 10.10.200.108 k8s-master2 <none> <none>

kube-proxy-c6bpd 1/1 Running 0 11h 10.10.200.111 k8s-node3 <none> <none>

kube-proxy-dc6vx 1/1 Running 0 11h 10.10.200.109 k8s-node1 <none> <none>

kube-proxy-g4h2z 1/1 Running 0 12h 10.10.200.107 k8s-master1 <none> <none>

kube-proxy-nbmgf 1/1 Running 0 11h 10.10.200.110 k8s-node2 <none> <none>

kube-proxy-qckpk 1/1 Running 0 12h 10.10.200.108 k8s-master2 <none> <none>

kube-proxy-rz4tf 1/1 Running 2 44h 10.10.200.106 k8s-master <none> <none>

kube-scheduler-k8s-master 1/1 Running 10 44h 10.10.200.106 k8s-master <none> <none>

kube-scheduler-k8s-master1 1/1 Running 0 12h 10.10.200.107 k8s-master1 <none> <none>

kube-scheduler-k8s-master2 1/1 Running 0 12h 10.10.200.108 k8s-master2 <none> <none>

Dashboard (web UI add-on)

- Download the official YAML file and edit it

wget -c https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml

## ... (much content omitted)
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort ## expose the service as a NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32443 ## port used to reach the Dashboard
  selector:
    k8s-app: kubernetes-dashboard

## ... (much content omitted)
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin ## changed to the cluster-admin role
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

$ kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard unchanged

serviceaccount/kubernetes-dashboard unchanged

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

- Get a login token

kubectl create sa dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}')

DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}')

echo ${DASHBOARD_LOGIN_TOKEN}
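The `grep | awk` pair used to pull the token out of `kubectl describe secret` can be folded into a single awk filter that stops at the first match. A minimal sketch; `extract_token` is a hypothetical helper that reads the describe output on stdin.

```shell
# Hypothetical helper: read `kubectl describe secret` output on stdin and
# print the value of the first "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}

# Usage:
#   DASHBOARD_LOGIN_TOKEN="$(kubectl describe secret -n kube-system "${ADMIN_SECRET}" | extract_token)"
```

Matching on the whole first field avoids picking up other lines that merely begin with the word token.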

Generate a kubeconfig

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=dashboard.kubeconfig

# Set the client credentials, using the token created above

kubectl config set-credentials dashboard_user \

--token=${DASHBOARD_LOGIN_TOKEN} \

--kubeconfig=dashboard.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=dashboard_user \

--kubeconfig=dashboard.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=dashboard.kubeconfig

kube-prometheus

- Pull the YAML files

git clone https://github.com/coreos/kube-prometheus.git

cd kube-prometheus/

sed -i -e 's_quay.io_quay.mirrors.ustc.edu.cn_' manifests/*.yaml manifests/setup/*.yaml # use the USTC registry mirror

kubectl apply -f manifests/setup # install prometheus-operator

kubectl apply -f manifests/ # install the Prometheus metrics adapter

FAQ

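A namespace stays stuck in Terminating after deletion

kubectl delete ns xxx --grace-period=0 --force # xxx is the namespace name

# If it is still stuck, dump the namespace, clear the spec.finalizers list in the JSON file, and submit the file to the finalize endpoint

kubectl get namespace ingress-nginx -o json > ingress-nginx.json

kubectl proxy --port=8081 &

curl -k -H "Content-Type: application/json" -X PUT --data-binary @ingress-nginx.json http://127.0.0.1:8081/api/v1/namespaces/ingress-nginx/finalize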

Removing node taints

# View

$ kubectl describe node k8s-master2 |grep Taint

# Remove

$ kubectl taint nodes k8s-node2 check:NoExecute-

# Set

$ kubectl taint nodes k8s-node2 check=yuanzhang:NoExecute

Error: check-ignore-label.gatekeeper.sh

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:05:42] C:1

$ kubectl create -f crds.yaml -f common.yaml -f operator.yaml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephblockpools.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephclients.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephclusters.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephfilesystemmirrors.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephfilesystems.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephnfses.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephobjectrealms.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephobjectstores.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephobjectstoreusers.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephobjectzonegroups.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephobjectzones.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "cephrbdmirrors.ceph.rook.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "objectbucketclaims.objectbucket.io" already exists

Error from server (AlreadyExists): error when creating "crds.yaml": customresourcedefinitions.apiextensions.k8s.io "objectbuckets.objectbucket.io" already exists

Error from server (InternalError): error when creating "common.yaml": Internal error occurred: failed calling webhook "check-ignore-label.gatekeeper.sh": Post "https://gatekeeper-webhook-service.moresec-system.svc:443/v1/admitlabel?timeout=3s": dial tcp 10.109.210.72:443: connect: connection refused

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-object-bucket" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-cluster-mgmt" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-system" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-global" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-mgr-cluster" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-object-bucket" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-global" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-osd" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rook-ceph-mgr-system" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-mgr-cluster" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-osd" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": podsecuritypolicies.policy "00-rook-privileged" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "psp:rook" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-ceph-system-psp" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "cephfs-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "cephfs-external-provisioner-runner" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-cephfs-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "cephfs-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "cephfs-csi-provisioner-role" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rbd-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterroles.rbac.authorization.k8s.io "rbd-external-provisioner-runner" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-plugin-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rook-csi-rbd-provisioner-sa-psp" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rbd-csi-nodeplugin" already exists

Error from server (AlreadyExists): error when creating "common.yaml": clusterrolebindings.rbac.authorization.k8s.io "rbd-csi-provisioner-role" already exists

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "common.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "operator.yaml": namespaces "rook-ceph" not found

Error from server (NotFound): error when creating "operator.yaml": namespaces "rook-ceph" not found

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:07:53]

$ kubectl -n rook-ceph get pod

No resources found in rook-ceph namespace.

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:08:38]

$ kubectl create namespace rook-ceph

Error from server (InternalError): Internal error occurred: failed calling webhook "check-ignore-label.gatekeeper.sh": Post "https://gatekeeper-webhook-service.moresec-system.svc:443/v1/admitlabel?timeout=3s": dial tcp 10.109.210.72:443: connect: connection refused

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:09:06] C:1

$ kubectl create namespace rook-ceph

Error from server (InternalError): Internal error occurred: failed calling webhook "check-ignore-label.gatekeeper.sh": Post "https://gatekeeper-webhook-service.moresec-system.svc:443/v1/admitlabel?timeout=3s": dial tcp 10.109.210.72:443: connect: connection refused

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:09:17] C:1

$ kubectl get cert-manager-webhook

error: the server doesn't have a resource type "cert-manager-webhook"

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:15:50] C:1

$ kubectl get validatingwebhookconfigurations.admissionregistration.k8s.io

NAME WEBHOOKS AGE

gatekeeper-validating-webhook-configuration 2 14d

mutatingwebhookconfiguration.admissionregistration.k8s.io

# root @ k8s-master1 in /opt/rook/cluster/examples/kubernetes/ceph on git:master x [14:16:22]

$ kubectl delete validatingwebhookconfigurations.admissionregistration.k8s.io gatekeeper-validating-webhook-configuration

validatingwebhookconfiguration.admissionregistration.k8s.io "gatekeeper-validating-webhook-configuration" deleted
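
The transcript above deletes the Gatekeeper validating webhook outright because its backing Service refused connections, which blocked `kubectl create namespace rook-ceph`. A slightly more cautious sequence, sketched below, saves the configuration first so it can be restored later. This is only a sketch: the `run` helper, the `DRY_RUN` switch and the `/tmp` backup path are hypothetical additions, not part of the original steps, while the webhook, Service and namespace names are taken from the output above.

```shell
#!/usr/bin/env bash
# Sketch: back up a blocking admission webhook configuration before deleting it.
# DRY_RUN defaults to 1, so by default the script only prints what it would run.
DRY_RUN="${DRY_RUN:-1}"
WEBHOOK="gatekeeper-validating-webhook-configuration"   # name from the listing above
BACKUP="${BACKUP:-/tmp/${WEBHOOK}.yaml}"                 # hypothetical backup path

# Hypothetical helper: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

remove_webhook() {
  # Check whether any pod is actually serving behind the webhook Service.
  run kubectl -n moresec-system get endpoints gatekeeper-webhook-service
  # Save the configuration so it can be restored with `kubectl apply -f`.
  run sh -c "kubectl get validatingwebhookconfiguration $WEBHOOK -o yaml > $BACKUP"
  # Remove the webhook that rejects every request while its backend is down.
  run kubectl delete validatingwebhookconfiguration "$WEBHOOK"
  # Retry the namespace creation that failed earlier.
  run kubectl create namespace rook-ceph
}

remove_webhook
```

Run it with `DRY_RUN=0` to execute the commands for real. Deleting the webhook configuration turns off Gatekeeper admission checks until the saved file is applied again. Because the cluster-scoped objects from `common.yaml` already exist, re-running the manifests with `kubectl apply -f` rather than `kubectl create -f` should avoid a second round of `AlreadyExists` errors.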

Cleaning up the cluster

kubeadm reset

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

systemctl start docker
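
The cleanup steps above can be collected into one script. The version below is a sketch under a few assumptions: it uses `ip link set ... down` in place of `ifconfig` (which may not be installed on minimal systems), it adds the iptables and IPVS flush that `kubeadm reset` itself says it does not perform, and the `run` helper and `DRY_RUN` switch are hypothetical conveniences that were not in the original commands. Note that the iptables flush removes all rules on the node, not only the ones Kubernetes created.

```shell
#!/usr/bin/env bash
# Sketch: reset a kubeadm node and remove leftover CNI state.
# DRY_RUN defaults to 1, so by default the script only prints what it would run.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

cleanup_node() {
  run kubeadm reset
  run systemctl stop kubelet
  run systemctl stop docker

  # Remove CNI and kubelet state left behind by the old cluster.
  run rm -rf /var/lib/cni/ /etc/cni/
  run rm -rf /var/lib/kubelet/*

  # Bring the virtual interfaces down; ignore interfaces that do not exist.
  for dev in cni0 flannel.1 docker0; do
    run ip link set "$dev" down || true
  done
  # Delete the CNI interfaces; docker0 is left for Docker to manage.
  for dev in cni0 flannel.1; do
    run ip link delete "$dev" || true
  done

  # Addition to the original steps: kubeadm reset leaves these tables untouched.
  run iptables -F
  run iptables -t nat -F
  run iptables -t mangle -F
  run iptables -X
  run ipvsadm --clear

  run systemctl start docker
}

cleanup_node
```

Run it with `DRY_RUN=0` as root on each node that should leave the cluster. `kubeadm reset` also leaves `$HOME/.kube/config` in place, so remove that file by hand if the node will join a different cluster.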

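- Original author: 老鱼干🦈

- Original link: //www.tinyfish.top:80/post/kubernets/2.kubeadm%E5%AE%89%E8%A3%85kubernets1.22.1%E9%9B%86%E7%BE%A4/

- Copyright notice: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. For non-commercial reposting, please credit the source (author and original link); for commercial reposting, please contact the author for authorization.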